Overview

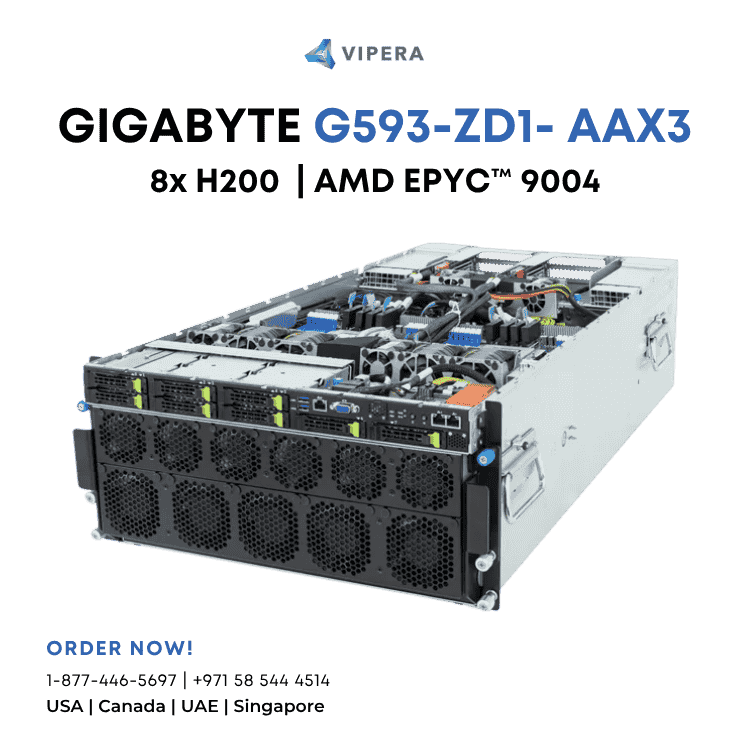

High-performance 2U rackmount server with 8x NVIDIA H200 SXM GPUs connected via NVLink. Optimized for large-scale LLM training and inference workloads requiring maximum GPU memory bandwidth.

Key Features

- 8x NVIDIA H200 SXM GPUs with NVLink

- High-bandwidth HBM3e memory for LLM workloads

- NVSwitch fabric for all-to-all GPU communication

- Dual-socket CPU platform

- Enterprise redundant power and advanced cooling

Ideal For

AI teams requiring enterprise-grade infrastructure for LLM training, fine-tuning, and high-throughput inference.

Features

- 900 GB/s GPU-to-GPU bandwidth with NVIDIA NVLink™ and NVSwitch™

- 2 x 10 Gb/s LAN ports via Intel® X710-AT2

- 4+2 3000W 80 PLUS Titanium redundant power supplies

$339,000.00

Prices may vary. Verify on vendor site.

Quick Specs

- 4+2 3000W 80 PLUS Titanium redundant power supplies

Tags

llm-training, llm-inference, generative-ai