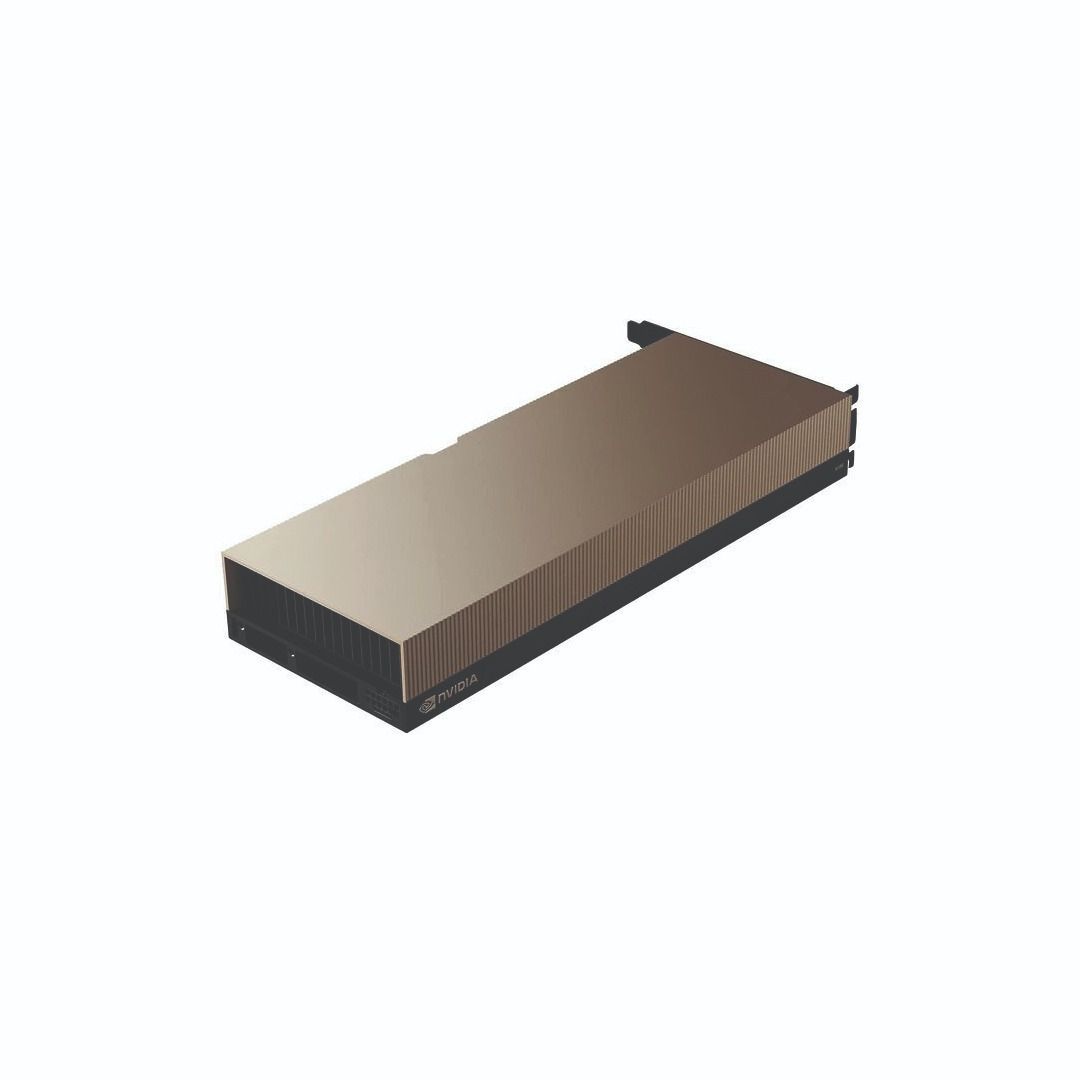

NVIDIA 900-21010-0040-000 H200 NVL Graphics Card, Passive Cooling, 141 GB, PCIe

Central Computer

Overview

2U rackmount server, optimized for AI inference and enterprise compute workloads.

Key Features

- H200 with 141GB VRAM

- NVIDIA NVLink GPU interconnect

- 2U rackmount form factor

Ideal For

Enterprise teams, developers, and research institutions running large-scale AI training, multi-GPU workloads, and enterprise inference deployments.

| Specification | Value |
| --- | --- |
| FP64 | 34 TFLOPS |
| FP64 Tensor Core | 67 TFLOPS |
| FP32 | 67 TFLOPS |
| TF32 Tensor Core | 989 TFLOPS |
| BFLOAT16 Tensor Core | 1,979 TFLOPS |
| FP16 Tensor Core | 1,979 TFLOPS |
| FP8 Tensor Core | 3,958 TFLOPS |
| INT8 Tensor Core | 3,958 TFLOPS |
| GPU Memory | 141GB |
| GPU Memory Bandwidth | 4.8TB/s |
| Decoders | 7 NVDEC, 7 JPEG |
| Confidential Computing | Supported |
| Max Thermal Design Power (TDP) | Up to 600W (configurable) |
| Multi-Instance GPUs | Up to 7 MIGs @18GB each |
| Form Factor | PCIe |
| Interconnect | 2- or 4-way NVIDIA NVLink bridge: 900GB/s; PCIe Gen5: 128GB/s |
| Server Options | NVIDIA MGX™ H200 NVL partner and NVIDIA-Certified Systems with up to 8 GPUs |
| NVIDIA AI Enterprise | Included |
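As a rough illustration of what the memory figures in this listing mean in practice, the sketch below estimates whether a model's weights fit in the 141GB of GPU memory, or in a single MIG slice (reading the MIG entry as 18GB each). The model sizes, the `headroom` fraction reserved for activations and caches, and the helper names `weights_gb` and `fits` are assumptions made for illustration; they do not come from the vendor or from NVIDIA.

```python
# Figures read from the listing's spec entries.
GPU_MEMORY_GB = 141   # "GPU Memory"
MIG_SLICES = 7        # "Multi-Instance GPUs": up to 7 slices
MIG_SLICE_GB = 18     # assumed reading of the per-slice size

# Standard storage size, in bytes, of one parameter at each precision.
BYTES_PER_PARAM = {"fp32": 4, "bf16": 2, "fp16": 2, "fp8": 1, "int8": 1}


def weights_gb(params_billions: float, precision: str) -> float:
    """Approximate footprint of the weights alone, in GB (1 GB = 1e9 bytes)."""
    return params_billions * BYTES_PER_PARAM[precision]


def fits(params_billions: float, precision: str,
         capacity_gb: float = GPU_MEMORY_GB, headroom: float = 0.8) -> bool:
    """True if the weights fit in `headroom` * capacity.

    The 0.8 default is an arbitrary assumption that leaves space for
    activations, KV cache, and runtime overhead; tune it per workload.
    """
    return weights_gb(params_billions, precision) <= capacity_gb * headroom


if __name__ == "__main__":
    # Hypothetical model sizes, in billions of parameters.
    for size in (7, 70):
        for precision in ("bf16", "fp8"):
            print(f"{size}B @ {precision}: "
                  f"{weights_gb(size, precision):.0f} GB, "
                  f"fits full GPU: {fits(size, precision)}, "
                  f"fits one MIG slice: "
                  f"{fits(size, precision, capacity_gb=MIG_SLICE_GB)}")
```

This counts weights only, so treat the result as a lower bound on real memory use rather than a sizing guarantee.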

$29,999.99

Prices may vary. Verify on vendor site.

Quick Specs

- FP64: 34 TFLOPS

- FP64 Tensor Core: 67 TFLOPS

- FP32: 67 TFLOPS

- TF32 Tensor Core: 989 TFLOPS

- BFLOAT16 Tensor Core: 1,979 TFLOPS

- FP16 Tensor Core: 1,979 TFLOPS

Tags

llm-training, generative-ai