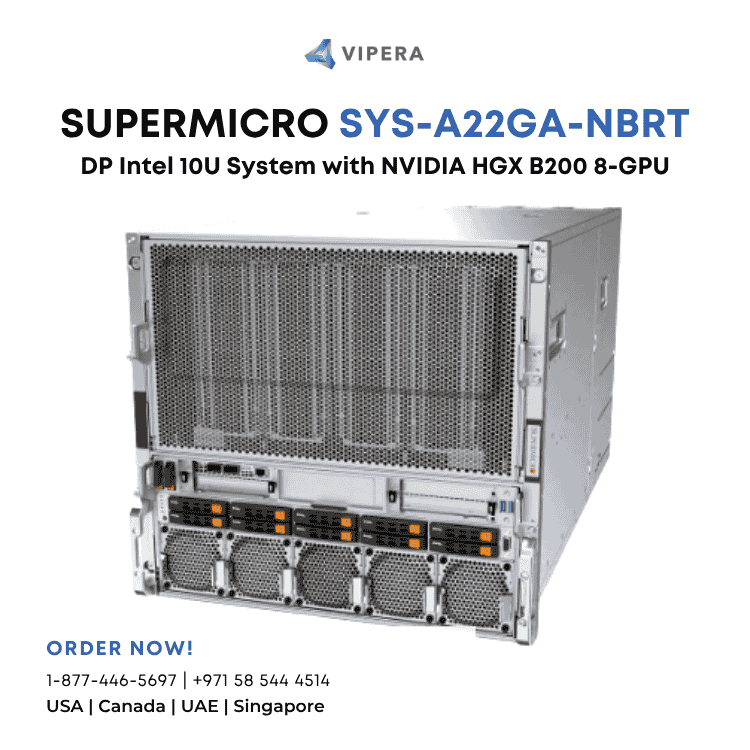

Overview

Enterprise 2U rackmount server built on the NVIDIA HGX B200 platform with eight B200 GPUs. Designed for next-generation AI training, its Blackwell architecture adds FP4 and FP8 precision support to accelerate large language model workloads.

Key Features

- 8x NVIDIA B200 GPUs on HGX baseboard

- NVLink 5.0 interconnect with 1.8TB/s of GPU-to-GPU bandwidth per GPU

- Blackwell architecture with a second-generation Transformer Engine supporting FP4 and FP8

- 180GB HBM3e memory per GPU (1.4TB total)

- Enterprise-grade redundant power and cooling

Ideal For

Large enterprises and hyperscalers requiring maximum AI training performance for frontier model development.

Specifications

- 8x NVIDIA B200 SXM GPUs, 1.4TB total GPU memory

- 24x DIMM slots, ECC RDIMM / MRDIMM DDR5 up to 8800 MT/s (1DPC)

- 2x 10GbE RJ-45, 1x dedicated BMC/IPMI

$495,000.00

Prices may vary. Verify on vendor site.

Tags

llm-training, generative-ai, hpc